In a typical video or image deepfake, an algorithm starts with a video clip or image of one person, then swaps that person’s face for the face of someone else. However, instead of swapping faces, deepfake technology can also alter a person’s facial expressions. Both of these capabilities have the potential for nefarious applications. Deepfake technology could be used to doctor or create a video of someone acting or speaking in an unscrupulous way for purposes of character assassination motivated by revenge, political gain, or cruelty. Even the possibility of deepfakes undermines trust in video, image, or audio evidence. Some researchers have responded by developing methods to detect deepfakes by leveraging the same machine learning technology that makes them possible.

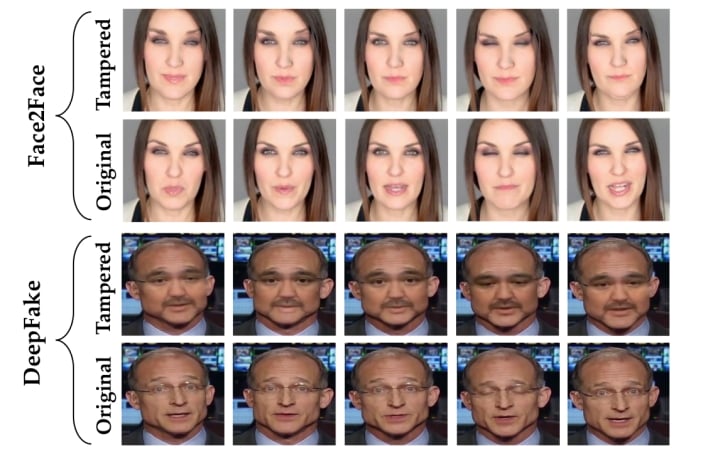

The new framework, which the computer scientists named “Expression Manipulation Detection” (EMD), first maps facial expressions, then passes that information on to an encoder-decoder that detects manipulations. The framework is able to identify which regions of a face have been manipulated. The researchers applied their framework to the DeepFake and Face2Face datasets and achieved 99% accuracy in detecting both identity and expression swaps. This paper gives us hope that automated detection of deepfakes is a real possibility.
