By looking at photographs and drawing on their past experiences, humans can often perceive depth in pictures that are, themselves, perfectly flat. Getting computers to do the same thing, however, has proved quite challenging.

The problem is difficult for several reasons, one being that information is inevitably lost when a scene that takes place in three dimensions is reduced to a two-dimensional (2D) representation. There are some well-established strategies for recovering 3D information from multiple 2D images, but each has its limitations. A new approach called “virtual correspondence,” developed by researchers at MIT and other institutions, can get around some of these shortcomings and succeed in cases where conventional methods falter.

The standard approach, called “structure from motion,” is modeled on a key aspect of human vision. Because our eyes are separated from each other, each offers a slightly different view of an object. A triangle can be formed whose sides are the line segment connecting the two eyes plus the line segments connecting each eye to a common point on the object in question. Knowing the angles in the triangle and the distance between the eyes, it is possible to determine the distance to that point using elementary geometry, although the human visual system can, of course, make rough judgments about distance without arduous trigonometric calculations. This same basic idea, triangulation from parallax views, has been exploited by astronomers for centuries to calculate the distance to faraway stars.
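The eye-and-target triangle can be worked through numerically. The following is a minimal sketch, assuming the two interior angles at the ends of the baseline are already known; the function name `triangulate_depth` and the 6.5 cm eye separation in the usage note are illustrative choices, not values from the research. It applies the law of sines to get the distance from one viewpoint, then projects that onto the direction perpendicular to the baseline.

```python
import math


def triangulate_depth(baseline: float, alpha_deg: float, beta_deg: float) -> float:
    """Perpendicular distance from the baseline to the target point.

    baseline  -- separation between the two viewpoints (e.g. the two eyes)
    alpha_deg -- interior angle at the left viewpoint, between baseline and sight line
    beta_deg  -- interior angle at the right viewpoint, between baseline and sight line
    """
    alpha = math.radians(alpha_deg)
    beta = math.radians(beta_deg)
    if alpha + beta >= math.pi:
        raise ValueError("sight lines do not converge in front of the baseline")
    # Law of sines: the angle at the target is pi - alpha - beta, and sin(pi - x) = sin(x)
    dist_from_left = baseline * math.sin(beta) / math.sin(alpha + beta)
    # Project the left-eye-to-target distance onto the perpendicular of the baseline
    return dist_from_left * math.sin(alpha)
```

For two viewpoints 6.5 cm apart that each see a point at 89 degrees from the baseline, `triangulate_depth(0.065, 89.0, 89.0)` gives roughly 1.86 m. Because the sight lines are nearly parallel at that range, small angle errors shift the estimate a lot, which is why a longer baseline helps.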

Triangulation is a key element of structure from motion. Suppose you have two pictures of an object, say a sculpted figure of a rabbit, one taken from the left side of the figure and the other from the right. The first step is to find points or pixels on the rabbit’s surface that both images share. From there, a researcher can determine the “poses” of the two cameras: the positions from which the photos were taken and the direction each camera was facing. Knowing the distance between the cameras and how they were oriented, one can then triangulate to work out the distance to a selected point on the rabbit. And if enough common points are identified, it may be possible to obtain a detailed sense of the object’s (or “rabbit’s”) overall shape.
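Once the poses are known, the triangulation step can be sketched with the midpoint method: each camera contributes a ray from its center toward the matched point, and the estimate is the midpoint of the shortest segment joining the two rays (noisy rays rarely intersect exactly). This is a minimal pure-Python sketch, assuming the ray directions have already been converted from pixel positions into world coordinates using the estimated poses; the article does not say which triangulation method the researchers use, and `triangulate_midpoint` is a hypothetical name.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    c1, c2 -- camera centers in world coordinates (from the estimated poses)
    d1, d2 -- ray directions toward the matched point, also in world coordinates
    """
    w0 = (c1[0] - c2[0], c1[1] - c2[1], c1[2] - c2[2])
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; no baseline to triangulate from")
    # Closed-form minimizer of |(c1 + s*d1) - (c2 + t*d2)|^2 over s and t
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(ci + s * di for ci, di in zip(c1, d1))
    p2 = tuple(ci + t * di for ci, di in zip(c2, d2))
    # The rays rarely meet exactly, so split the difference between closest points
    return tuple((x + y) / 2.0 for x, y in zip(p1, p2))
```

With one camera at (-1, 0, 0) and the other at (1, 0, 0), both aimed at a spot 5 units in front of them, the function recovers (0, 0, 5). Repeating this over many matched points yields the point cloud from which an overall shape can be estimated.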

Considerable progress has been made with this technique, comments Wei-Chiu Ma, a Ph.D. student in MIT’s Department of Electrical Engineering and Computer Science (EECS), “and people are now matching pixels with greater and greater accuracy. So long as we can observe the same point, or points, across different images, we can use existing algorithms to determine the relative positions between cameras.” But the approach only works if the two images have a large overlap. If the input images have very different viewpoints, and hence contain few if any points in common, he adds, “the system may fail.”
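The matching step Ma describes can be illustrated with one common baseline, nearest-neighbor descriptor matching with Lowe's ratio test, swapped in here as an example; the article does not name the matcher the team uses. Each point in image A is summarized by a feature vector (the toy 2D vectors in the usage note are hypothetical stand-ins for real descriptors), and a match is kept only when the best candidate in image B is clearly closer than the runner-up. When two views barely overlap, few descriptors pass this test, which is the failure mode Ma describes.

```python
import math
from typing import List, Sequence, Tuple


def match_descriptors(
    desc_a: Sequence[Sequence[float]],
    desc_b: Sequence[Sequence[float]],
    ratio: float = 0.8,
) -> List[Tuple[int, int]]:
    """Return (index_in_a, index_in_b) pairs that pass the ratio test."""
    matches: List[Tuple[int, int]] = []
    if len(desc_b) < 2:
        return matches  # the ratio test needs a runner-up to compare against
    for i, da in enumerate(desc_a):
        # Euclidean distance from this descriptor to every candidate, closest first
        ranked = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        (best, j_best), (second, _) = ranked[0], ranked[1]
        # Accept only if the best candidate is clearly closer than the runner-up
        if best < ratio * second:
            matches.append((i, j_best))
    return matches
```

Matching `[(0, 0), (10, 10), (5, 5)]` against `[(0.1, 0), (10, 10.2), (20, 20)]` keeps the first two pairs and rejects the third descriptor, which sits almost equally far from two candidates and is therefore ambiguous.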

During summer 2020, Ma came up with a novel way of doing things that could greatly expand the reach of structure from motion. MIT was closed at the time due to the pandemic, and Ma was home in Taiwan, relaxing on the couch. While he was looking at the palm of his hand, and his fingertips in particular, it occurred to him that he could clearly picture his fingernails, even though they were not visible to him.

https://www.youtube.com/watch?v=LSBz9-TibAM

That was the inspiration for the notion of virtual correspondence, which Ma has since pursued with his advisor, Antonio Torralba, an EECS professor and investigator at the Computer Science and Artificial Intelligence Laboratory, along with Anqi Joyce Yang and Raquel Urtasun of the University of Toronto and Shenlong Wang of the University of Illinois. “We want to incorporate human knowledge and reasoning into our existing 3D algorithms,” Ma says: the same reasoning that enabled him to look at his fingertips and conjure up fingernails on the other side, the side he could not see.

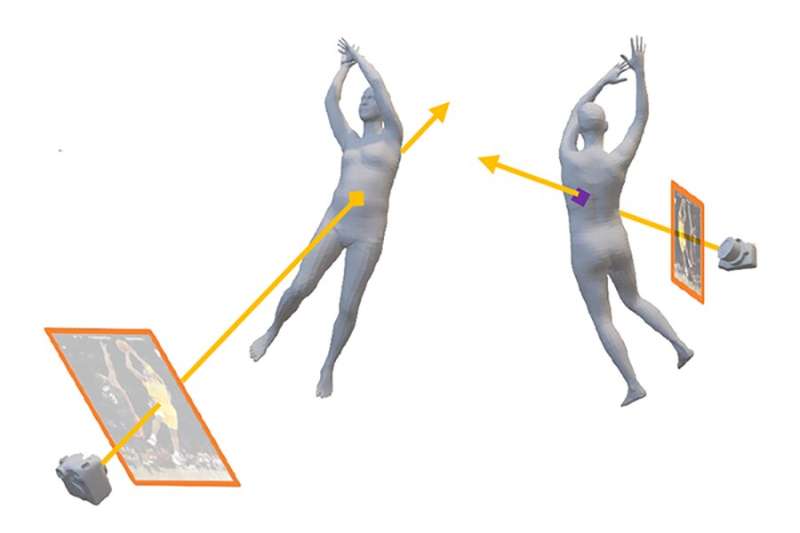

Structure from motion works when two images have points in common, because a triangle can then always be drawn connecting the cameras to the common point, and depth information can be gleaned from it. Virtual correspondence offers a way to carry things further. Suppose, once again, that one photo is taken from the left side of a rabbit and another from the right side. The first photo might reveal a spot on the rabbit’s left leg. But since light travels in a straight line, one could use general knowledge of the rabbit’s anatomy to know where a light ray going from the camera to the leg would emerge on the rabbit’s other side. That point may be visible in the other image (taken from the right-hand side) and, if so, it could be used via triangulation to compute distances in the third dimension.

Virtual correspondence, in other words, allows one to take a point from the first image on the rabbit’s left flank and connect it with a point on the rabbit’s unseen right flank. “The advantage here is that you don’t need overlapping images to proceed,” Ma notes. “By looking through the object and coming out the other end, this technique provides points in common to work with that weren’t initially available.” In that way, the constraints imposed on the conventional method can be circumvented.
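A toy version of the idea can be sketched under a strong simplifying assumption: a sphere stands in for the shape prior. The team's methods rely on knowledge of the human form captured by neural networks, which this sketch does not attempt. The steps are to cast the ray from the first camera through the observed point, find where it exits the far side of the shape, and keep that exit point only if it faces the second camera. The function `virtual_correspondence` and its parameters are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def virtual_correspondence(
    cam1: Vec3, ray_dir: Vec3, center: Vec3, radius: float, cam2: Vec3
) -> Optional[Vec3]:
    """Where a viewing ray from cam1 exits a spherical shape prior, if cam2 can see it.

    The sphere (center, radius) is a hypothetical stand-in for a learned shape prior.
    """
    length = math.sqrt(_dot(ray_dir, ray_dir))
    d = (ray_dir[0] / length, ray_dir[1] / length, ray_dir[2] / length)
    # Solve |cam1 + t*d - center|^2 = radius^2 for t (d has unit length)
    m = _sub(cam1, center)
    b = _dot(d, m)
    c = _dot(m, m) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the object entirely
    t_exit = -b + math.sqrt(disc)  # larger root: where the ray leaves the far side
    if t_exit <= 0:
        return None  # the object lies behind the first camera
    exit_pt = (cam1[0] + t_exit * d[0], cam1[1] + t_exit * d[1], cam1[2] + t_exit * d[2])
    # On a convex shape the outward normal at exit_pt points along (exit_pt - center);
    # cam2 sees the point only if that normal faces toward it
    normal = _sub(exit_pt, center)
    if _dot(normal, _sub(cam2, exit_pt)) <= 0:
        return None
    return exit_pt
```

With the first camera at (-5, 0, 0) looking along +x at a unit sphere centered on the origin, the ray enters at (-1, 0, 0) and exits at (1, 0, 0); a second camera at (5, 0, 0) can see that exit point, so it can be treated like an ordinary shared point and triangulated, even though the two views share no visible surface.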

One might ask how much prior knowledge is needed for this to work, because if you had to know the shape of everything in the image from the outset, no calculations would be required. The trick that Ma and his colleagues employ is to use certain familiar objects in an image, such as the human form, as a kind of “anchor,” and they have devised methods for using our knowledge of the human shape to help pin down the camera poses and, in some cases, infer depth within the image. In addition, Ma explains, “the prior knowledge and common sense that is built into our algorithms is first captured and encoded by neural networks.”

The team’s ultimate goal is far more ambitious, Ma says. “We want to make computers that can understand the three-dimensional world just like humans do.” That objective is still far from realization, he acknowledges. “But to go beyond where we are today, and build a system that acts like humans, we need a more challenging setting. In other words, we need to develop computers that can not only interpret still images but can also understand short video clips and eventually full-length movies.”

A scene in the film “Good Will Hunting” demonstrates what he has in mind. The audience sees Matt Damon and Robin Williams from behind, sitting on a bench that overlooks a pond in Boston’s Public Garden. The next shot, taken from the opposite side, offers frontal (though fully clothed) views of Damon and Williams with an entirely different background. Everyone watching the movie immediately knows they’re looking at the same two people, even though the two shots have nothing in common. Computers can’t make that conceptual leap yet, but Ma and his colleagues are working hard to make these machines more adept and, at least when it comes to vision, more like us.

The team’s work will be presented next week at the Conference on Computer Vision and Pattern Recognition.

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.

Citation:

Computer vision technique to enhance 3D understanding of 2D images (2022, June 20)

retrieved 20 June 2022

from https://techxplore.com/news/2022-06-vision-technique-3d-2d-images.html

